Artificial intelligence, a new era?

The new algorithms that generate images, text, voice and video are revolutionising our digital societies. To what extent and with what consequences?

Report by Adrien Denèle - Published on

Unprecedented hype

In the space of a few months, the status of artificial intelligence (AI) has shifted: it has gone from being a technology with a science-fiction flavour to a reality that threatens jobs, the reliability of information and the stability of social relations. And it's all thanks to algorithms developed by young private companies, hitherto unknown to the general public, such as OpenAI, ChatGPT's parent company, co-founded at the end of 2015 by none other than Sam Altman and Elon Musk! In the blink of an eye, this conversational AI can write long texts, summaries, poems and essays on demand, through a highly user-friendly interface that is accessible even to the youngest users.

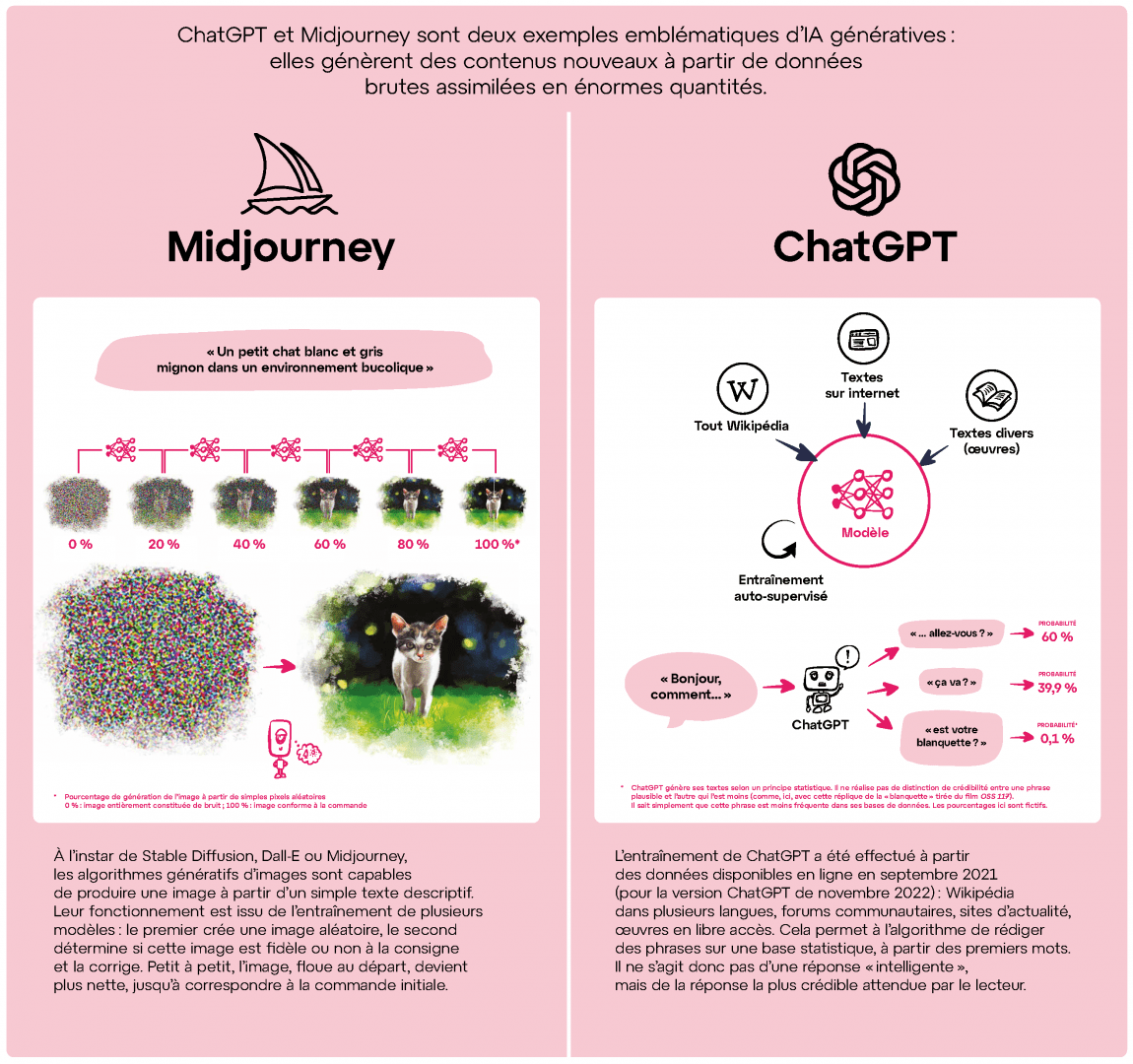

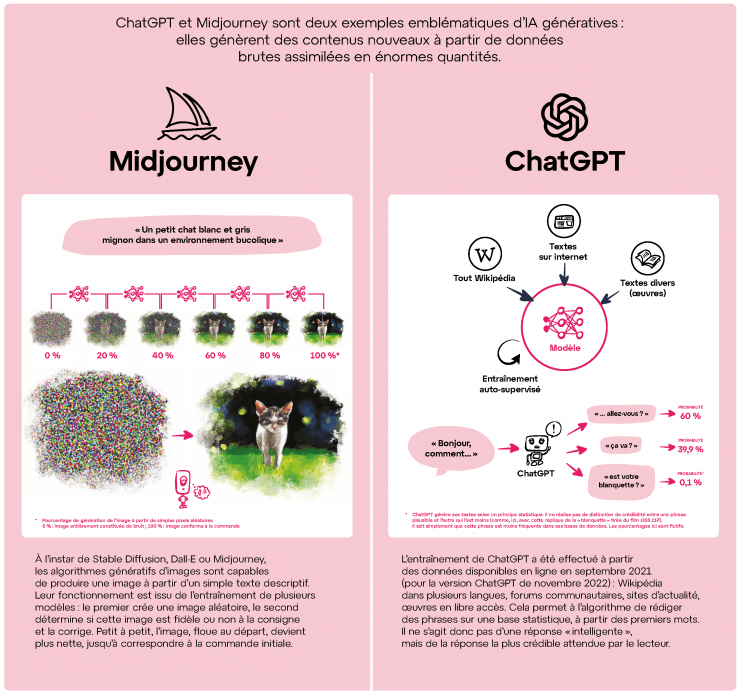

The DALL-E and Midjourney image generators turn the wildest visual ideas into photos, drawings and illustrations on demand. DALL-E belongs to OpenAI; Midjourney was developed by the American research laboratory of the same name, which employed fewer than 20 people in July 2023! Beyond these well-known AIs, there is Stable Diffusion, an open source image generator, as well as apps for video, music, data processing, voice imitation and more.

The simultaneous appearance of these new content generators is no coincidence: it stems from the emergence of new computing technologies and learning methods, coupled with mass-market interfaces. The result has been record-breaking. In January 2023, barely two months after going online, ChatGPT already had more than 100 million users, an unprecedented level of popularity for such a new service.

Midjourney dresses the Pope

A harsh winter in the Vatican? A switch to Christian rap? Neither: it's a very realistic image generated by Midjourney! Of course, a discerning eye might spot some telltale details: blurred contours, hands that look a little unconvincing… Other images, obviously fake, have caused irritation or amusement, such as the one of French President Emmanuel Macron meditating in the middle of the street during the protests against pension reform in France in early 2023. But someone scrolling quickly through social media might believe them. For example, a fake photo of an elderly man bleeding during those same protests sparked real outrage.

Artistic creation shaken up by AI

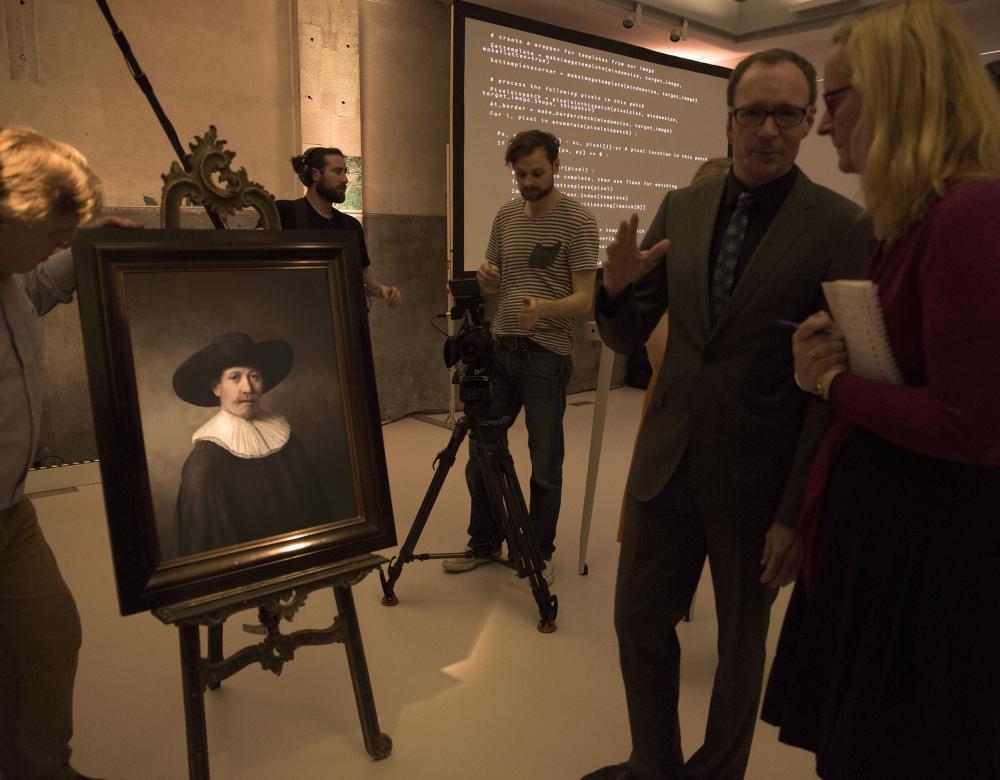

Here's a painting by Rembrandt… that has never been seen in a museum. It actually comes from The Next Rembrandt AI project, which collected and analysed data on 346 paintings by the Dutch artist, then trained an algorithm to reproduce his pictorial style. But AI is above all conquering contemporary art. In 2022, the work Théâtre d'Opéra Spatial won the digital art competition at the Colorado State Fair. Created by Jason Allen using Midjourney, it sparked much debate about the nature of artistic production. The same applies to music, where a track can now be composed in just a few clicks. Will the idea behind a work now take precedence over its execution?

A revolution for the worse… and for the better?

Is AI a threat to employment? Economists are still struggling to assess the extent of the risk. In a March 2023 report, the investment bank Goldman Sachs estimated that generative AI, the branch of AI devoted to producing original data, images, text and sound, could automate up to a quarter of current work in the United States and Europe, in fields such as administrative support, legal services, architecture and engineering. A study by the International Labour Organisation published in August 2023 is more reassuring: generative AI would above all change “the quality of jobs”, particularly administrative ones, by automating some of their tasks.

In any case, the scale and speed of the transition under way is a cause for concern. For years, legal experts and even Bill Gates, the former head of Microsoft, have proposed taxing automation to ease this transition, but no such tax has yet been adopted.

However, the picture is not uniformly negative: AI can automate tedious tasks such as data collection, writing computer code, routine translation and drafting simple contracts. Sometimes it can even improve the quality of work, as with certain medical diagnoses. The Galen Breast algorithm, developed with the Institut Curie, improves the detection of a large number of breast cancer subtypes. Another example: according to a study by the Indian foundation Lata Medical, AI analysis of electrocardiograms can accurately predict diabetes and even prediabetes. This is a promising method for low-income countries.

How can ChatGPT be used in schools?

Ever since ChatGPT appeared on the scene, some students have given in to temptation. Teachers run papers through software supposedly capable of spotting “AI writing”, with dubious results. At Texas A&M University in the United States, one professor even asked ChatGPT whether it had written the essays of 15 students, and the algorithm replied that it had. This proves very little: AI-detection software has also classified the American Constitution and passages from the Bible as AI-generated! Some institutions therefore prefer to regulate its use. Sciences Po Paris, for example, allows it under certain conditions and subject to an anti-plagiarism charter.

More ethical algorithms?

AI holds up a mirror to humanity's stereotypes, as shown by some recurring and, to say the least, disturbing patterns in the many requests made to Midjourney online. Because it draws on online databases, AI reproduces the stereotypes they contain. For example, when asked to draw a “white thief”, Midjourney drew a black man dressed in white. Misogyny was also evident in the first versions of ChatGPT, which often relegated women to the kitchen. Since then, things seem to have become more balanced, thanks to fine-tuning supervised by companies anxious to protect their image.

Who is responsible for algorithms?

Driverless cars opened up the debate: if an accident is inevitable, should a car hit an elderly person rather than a child? Should it sacrifice its driver? If not, at what cost? And if so, who would want to get into such a vehicle? In short, what is the legal responsibility of AI? Recent cases of suicide have reignited this controversy. In Belgium, in March 2023, a man took his own life after long conversations with Eliza, a conversational AI. Who is responsible for such an act: the user alone, the designer of the AI model, or the provider? The debate remains open.

Intellectual property laws, such as copyright, are another headache. Artists, for example, have supplied the Internet with a phenomenal quantity of works that are used to train AIs… free of charge and without receiving any royalties. Aren't they entitled to some form of remuneration or support? A first lawsuit for copyright infringement was filed against OpenAI in June 2023 in California, USA. The New York Times, CNN, Radio France and TF1, among others, have blocked OpenAI's web crawler, which they accuse of “plundering” their content.

The European Union wants to establish a comprehensive legal framework to clarify responsibility. On 14 June 2023, ahead of negotiations with the Member States, the European Parliament adopted its position on the AI Act, which calls for transparency about content produced by AI and about the data used to train the algorithms. A subject to keep an eye on.

The mechanisms of generative artificial intelligence

ChatGPT and Midjourney are two iconic examples of generative AI: they generate new content from raw data assimilated in huge quantities.

The tech giants are chasing AI

Having already competed with traditional media, the digital giants fear being overtaken in turn by the rise of AI, so they intend to play their part in its development. Not least, they are buying stakes in the start-ups behind the new generative AI algorithms, which are fast becoming geese that lay golden eggs. For example, Microsoft has invested in OpenAI, the company behind ChatGPT and DALL-E and one of the main new players in AI. This has enabled Bill Gates' company to integrate a conversational agent into its Bing search engine. Able to answer queries precisely, Bing now rivals Google's search engine, long considered unbeatable. Google's parent company, Alphabet, seems to have been taken by surprise: its conversational agent, Bard, unveiled in February 2023, is struggling to convince.

The giant Meta (Facebook), which is heavily involved in the metaverse, is also having difficulty competing. But the company is betting on the open source nature of LLaMA, a language model that is less data-intensive than ChatGPT; version 2 has been available since July 2023. Finally, Adobe has integrated AI features into Photoshop, its flagship photo retouching and image processing software, in an attempt to remain competitive. However, some employees have expressed concern about the viability of the business model: Adobe's main customers are graphic designers whose work is being made partly obsolete by AI. Is the company digging its own grave? While we wait to find out, the sums invested match the stakes: nearly 10 billion for Microsoft and Google. Enough to fuel the race for innovation.

The first AI films

Do you like AI images? You're going to love AI films! Talented filmmakers are already achieving interesting results with short films made almost entirely by AI. Humans write the instructions (including precise descriptions of each shot), select the images, edit them together and add any extra effects; the AI does everything else! Examples include the American sci-fi short The Frost and /Imagine, by French director Anna Apter. In video games, too, “non-player” characters can now sustain long, realistic conversations in response to the player's questions.

Lonely companions?

In February 2023, Snapchat, the popular app among 15–25 year-olds, launched the chatbot My AI, offering users the chance to chat with a simplified version of ChatGPT. My AI remembers the user's information and provides personalised advice, reassurance and consolation. This may lock already vulnerable individuals into an exclusive relationship with the machine rather than with other human beings. The World Health Organization (WHO) has openly expressed concern about the lack of caution currently being exercised in the use of generative AI.

Towards an age of widespread distrust

Is generative AI ushering in an age of ubiquitous fake news? For the time being, we are mostly amused by spoofs, such as the fake debate on Twitch between US President Joe Biden and his predecessor Donald Trump, full of comic banter. But what can be done if such content misleads voters? Technical progress is dizzying, and AI-generated documents are very difficult to identify as such. Internet giants, such as Google with SynthID and OpenAI with a visible watermark, are working on tools to mark texts, images and videos generated by AI.

The stakes are high: according to estimates cited by Europol, the European Union's law enforcement agency, nearly 90% of online content could be artificially produced by 2026.

Mistrust might also taint social relations. In early 2023, a Chinese businessman was swindled out of the equivalent of 570,000 euros after a video call: a fraudster using AI imitated the voice and face of an acquaintance and easily persuaded him to “lend” the money.

In fact, AI could lead to a proliferation of scams, made easier by the personal data available online: phone contacts, photo galleries, videos on social media, etc. Will families one day have to agree on a shared password to guard against identity theft? Or, worse still, will we need systematic protocols to prove that we are human, going beyond the CAPTCHAs commonly used today?